Some new Ryzen stats and it's looking good!

- Thread starter Montoya


Encircled Flux

Space Marshal

It totally depends on prices.

Nice how they didn't overclock the 6950X to 4 GHz+.


Good, this will hopefully make Intel get off its ass and start working harder for gaming instead of just focusing on mobile.

As much as I would love for this to be true...

It totally depends on prices.


It does seem that those numbers look a lot more like marketing nonsense than real world examples.

That is showing how good the CPU is at doing things that your GPU should be doing. So hooray AMD, you crammed more cores into consumer grade chips than Intel usually has, congratulations.

But that's 100% useless for anyone playing video games since we have GPUs, and game developers rarely (ahem, never?) actually use more than 2-4 CPU cores anyway.

You're much better off going for a 4-core chip with super fast clock speeds than a 10-core, low-clock chip if you want actual gaming performance. :slight_smile:

Now... A physics student who wants to run simulations on the CPU and has no money for a real GPU? Indeed it does look like a really nice chip.
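The trade-off being argued here (few fast cores for games, many slower cores for simulations) can be sketched with a deliberately crude toy model: a workload only benefits from as many cores as it has threads, and per-core speed scales linearly with clock. The chip specs and the `relative_throughput` helper below are hypothetical, made up purely for illustration; real performance also depends on IPC, cache, memory and turbo behaviour.

```python
# Toy model (hypothetical): throughput = usable cores x clock speed.
# A core only helps if the workload has a thread to run on it.

def relative_throughput(cores: int, clock_ghz: float, threads_used: int) -> float:
    """Return a unitless throughput score: usable cores x clock (GHz)."""
    usable_cores = min(cores, threads_used)
    return usable_cores * clock_ghz


# Illustrative chips, not real products: a fast quad-core vs a slower 10-core.
quad = {"cores": 4, "clock_ghz": 4.5}
deca = {"cores": 10, "clock_ghz": 3.0}

for label, threads in [("game using 4 threads", 4), ("simulation using 10 threads", 10)]:
    q = relative_throughput(quad["cores"], quad["clock_ghz"], threads)
    d = relative_throughput(deca["cores"], deca["clock_ghz"], threads)
    print(f"{label}: 4-core = {q:.1f}, 10-core = {d:.1f}")
```

Under these made-up numbers the quad-core wins the 4-thread "game" case (18.0 vs 12.0), while the 10-core wins once all 10 threads are busy (30.0 vs 18.0), which is the physics-student scenario.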


DarthMatter

Space Marshal

You called? ;)

Now... A physics student who wants to run simulations on the CPU and has no money for a real GPU? Indeed it does look like a really nice chip.

I haven't been in the loop on CPUs for a little while, so I'm happy to see this thread, giving me an update to look into. Might be interesting.

I thought you might like that. :)

You called? ;)


It always has been.

AMD becoming a real player in the field!

Never had an Intel CPU and never will.

Over Marketed, Over Hyped, Over Priced.

Just my opinion.

And I have been playing PC games for decades.

Dad jokes in a tech discussion?

Go Intel! The sun is Ryzen!

only in TEST

I love the attitude around here

What he said.

As much as I would love for this to be true...

It does seem that those numbers look a lot more like marketing nonsense than real world examples.

That is showing how good the CPU is at doing things that your GPU should be doing. So hooray AMD, you crammed more cores into consumer grade chips than Intel usually has, and you can perform useless calculations on them, congratulations.

But that's 100% useless for anyone playing video games since we have GPUs, and game developers rarely (ahem, never?) actually use more than 2-4 cores anyway.

You're much better off going for a 4-core chip with super fast clock speeds than a 10-core, low-clock chip if you want actual gaming performance rather than "hey we found 1 out of 1,000 benchmarks that makes our stuff look good." :slight_smile:

Now... A physics student who wants to run simulations on the CPU and has no money for a real GPU? Indeed it does look like a really nice chip.

Oh no you did not say that!

Go Intel! The sun is Ryzen!

Fixt ;)

It always has been.

Never had an Intel CPU and never will.

Over Marketed, Over Hyped, Over Priced.

Just my opinion.

And I have been playing PC games for decades. Slowly......

Floating Cloud

Space Marshal

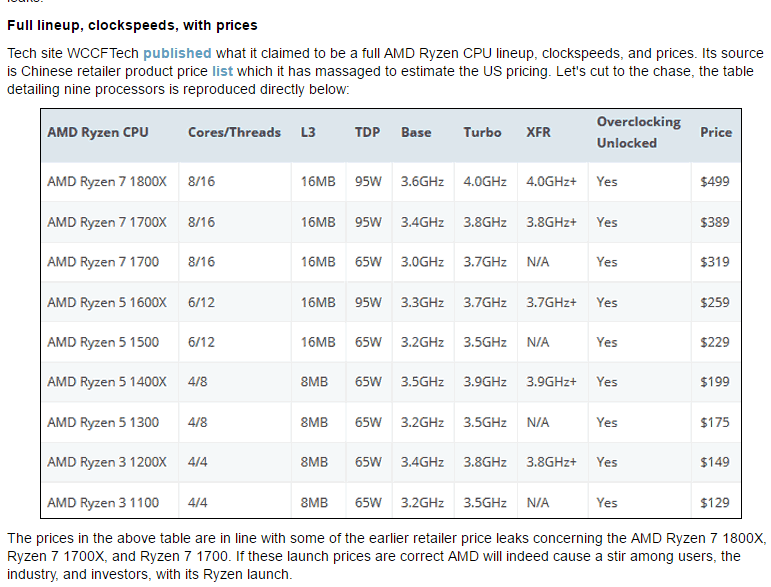

Estimated prices for the Ryzen processors. These are probably reasonably accurate.

This looks interesting in that it might be useful if it's cheap enough. I could imagine these in things like low-end/home render farms and simulation rigs. For gaming, I dunno; that 4 GHz sounds useful, but when it comes to real-world computing, it wouldn't be the first time that a cheaper or equally priced 3-3.5 GHz Intel outperforms a 4 GHz AMD. So who knows, gotta wait for real people to try it.
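Why a lower-clocked chip can still win: in a simplified model (an assumption, ignoring memory, cache and threading effects), performance is roughly instructions per clock (IPC) times clock frequency. The hypothetical `ipc_ratio_to_match` helper below just computes how much higher IPC a 3-3.5 GHz chip would need to tie a 4 GHz one under that model.

```python
# Simplified model (assumption): performance ~ IPC x clock frequency.

def ipc_ratio_to_match(slow_clock_ghz: float, fast_clock_ghz: float) -> float:
    """IPC multiplier the lower-clocked chip needs to tie the higher-clocked one."""
    return fast_clock_ghz / slow_clock_ghz


for intel_clock in (3.0, 3.5):
    ratio = ipc_ratio_to_match(intel_clock, 4.0)
    print(f"A {intel_clock} GHz chip needs {ratio:.2f}x the IPC to match 4.0 GHz")
```

So a 3.5 GHz part only needs about 14% more work done per clock to match a 4 GHz part, and a 3.0 GHz part about 33% more.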

I always had Intel CPUs simply because I watched my friends try to be "smarter" than everyone else and melt their cheapo AMD rigs on a yearly basis when they overclocked them to keep up. Of course, they didn't want to spend the difference on serious cooling... Mind you, they did the same to cheaper Intel CPUs as well. You could cook an omelette on an AMD, but you could sear a steak with a Celeron :D

I still remember the fights in the classroom about someone clocking their AMD 20% higher than factory and pissing on Intels, cos you could only get those up about 10-15% before you needed serious cooling (and the performance gains dropped off too). What they always failed to mention was that their AMD rig performed only 5% faster with a +20% clock boost and kept crashing every 10-15 minutes, while clocking up the mid-to-high-end Intels by 5-10% gave an almost 1:1 gain in performance without any changes to cooling, and stayed stable.
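The "20% more clock, 5% more speed" complaint is really about overclock scaling efficiency: what fraction of the extra clock actually shows up as performance. A minimal sketch using the anecdotal figures from this post; the `scaling_efficiency` name is hypothetical, and the Intel case is an idealised reading of "almost 1 to 1".

```python
def scaling_efficiency(clock_gain_pct: float, perf_gain_pct: float) -> float:
    """Fraction of a clock-speed increase that shows up as performance (1.0 = perfect)."""
    if clock_gain_pct == 0:
        raise ValueError("clock gain must be non-zero")
    return perf_gain_pct / clock_gain_pct


# Anecdotal figures as recalled in the post, not benchmark results.
amd = scaling_efficiency(clock_gain_pct=20, perf_gain_pct=5)
intel = scaling_efficiency(clock_gain_pct=10, perf_gain_pct=10)  # idealised "1 to 1"
print(f"AMD OC: {amd:.0%} of the extra clock became performance")
print(f"Intel OC: {intel:.0%} of the extra clock became performance")
```

By that measure the described AMD overclock converted only a quarter of its extra clock into speed, before even counting the crashes.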

I'm talking about the years 2000-2005 or so; I have no idea how today's bunch would perform if you tried a 10-15% OC. And I couldn't care less, really.

When all is said and done, if you have a clean system with something like a 3.5 GHz i5 or an AMD equivalent and a program doesn't run flawlessly, it's most likely the programmers' fault, and it wouldn't run any faster with an OC. They got way too laid back and forgot how to optimise stuff, while not much progress has been made in raw computing power over the last decade, so the need for optimisation is still there.

AMD (ATI back in the day) and Nvidia graphics cards are the same deal. You simply get what you pay for, or you pay the price later when you try to get more out of the cheaper stuff. I had a few of the early GeForce cards, then switched to ATI because I liked the cheapness. And boy, they were really cheap when it came to build quality and driver support. I had mostly Asus cards, with the exception of an MSI and a Sapphire. All were crap in some way, and they performed worse by about as much as they were cheaper than Nvidia cards. Of course, the gap in build quality is much smaller these days: Nvidia cards have the same badly designed, loud fans that start rattling after a week, and the same dodgy connectors on the board, as AMD cards do lol.

I still have a 1st-gen Core 2 Duo (that's almost 10 years, damn I'm getting old), and it ran 10% overclocked from day one on its factory heatsink and fan. I only cleaned it about once a year, and it lived in an open house for at least 5 of its years! It's a mystery how it survived all the food and dust that got into that fan... I finally retired it in December, as older i5s got really cheap on the used market.

So yeah, I ain't gonna switch.


If AMD can inject some of the magic they've put into their Fiji and Polaris graphics cards, then they do stand a chance!!!

They just haven't seemed able to get on top of CPUs over the last 5 years. Not sure why.

One thing's for sure: whenever AMD do get it right, prices on Intel stuff go down.


Xian-Luc Picard

Space Marshal

This. It is always this. I will believe it once it is out in the wild.

It does seem that those numbers look a lot more like marketing nonsense than real world examples.

Ryzen is looking like it's going to be a major comeback for AMD. The legendary Jim Keller did his magic again. It looks like they're going to undercut Intel's lineup by literally hundreds of dollars.

ThomSirveaux

Space Marshal

As someone who has been running the same rig for 5 years now, it's time. It's more than time. I need a new rig, and to turn my current setup into a dedicated streaming/3D printing rig.

Ryzen-sama, please save this humble PCbro.
